Bugs, Skool and a GPT VA

The Weekly Variable

App support is in full swing.

Skool is going better than expected.

And I can’t decide if OpenAI made this all obsolete or not.

Topics for this week:

- Production Issues
- Automatic Shorts - Part 3
- Back to Skool
- Gemini CLI
- GPT Agent

Production Issues

With Wave now live, the bug reports started rolling in.

It’s to be expected, but it’s still stressful even when I know it’s coming.

Doesn’t help that I’m back to being the entire tech team.

At my first job, for a time, I basically was “the web team”. I handled most of the coding, the operations, and any issues that came up, while my manager handled all the administrative work and planning and tried to keep our scope in check.

So it’s been about a decade since I’ve worn all the hats.

On the one hand, it’s nice to have complete control.

I own the entire codebase, so I generally know where an issue is happening, and I can make changes whenever I want.

I don’t have to dig through 3 to 5 separate, constantly changing repositories, each with hundreds of thousands of lines of code, to find out who actually owns the issue. Then I don’t have to convince that team that it’s a real problem that needs to be prioritized for a hotfix, which requires a case to be submitted and approved by Product and Management so that the release management team can review it and approve an unplanned release to production.

And I’m sure I missed some steps in that process.

Instead, I can fix the problem, create a new build, and submit it to Apple for approval.

By morning, Apple will have approved the build, and I can update the App Store with a new version of the app.

On the one hand, I get that control.

On the other hand, I get all the blame.

No hiding in corporate red tape or philosophical blame-shifting questions like “is leadership really to blame?” or “is this a systemic problem?”.

Just two big bugs, all on me (and maybe Gemini or GPT).

Out-of-date dependencies in the build were preventing Wave from loading for some people, but not everyone.

A quick update fixed that thankfully - no more black screen after opening.

Pro tip: always run npx expo-doctor if you’re building an Expo app 🤦♂️

That fix was released Tuesday.

As for the second bug, I didn’t realize I had a race condition: the service URLs wouldn’t always load in time, so the app fell back to the test URLs, which don’t work anywhere but my computer…

With those services down, people couldn’t complete their profiles because the image upload failed, and a profile image is required at the moment.

Restarting the app would load the proper URLs, since they had been saved by then, but that’s not a great experience the first time someone tries the app.

Updating the code to wait for the URLs to load, and changing the fallbacks so they’re no longer the test URLs, fixed the issue in the second release.
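That fix boils down to a common pattern: every caller awaits one shared load of the config, and the fallback points at something that actually works in production. Below is a minimal TypeScript sketch of that pattern, not Wave’s real code; `ServiceUrls`, `createServiceUrlProvider`, `PRODUCTION_FALLBACK`, `uploadTarget`, and the example.com URLs are all hypothetical names made up for illustration.

```typescript
// Hypothetical config shape; the app's real service names are not known.
interface ServiceUrls {
  api: string;
  upload: string;
}

// Assumed fallback: working production services, never localhost/test URLs.
const PRODUCTION_FALLBACK: ServiceUrls = {
  api: "https://api.example.com",
  upload: "https://upload.example.com",
};

// Stand-in for however the app actually fetches its URLs (remote config, storage, etc.).
type UrlLoader = () => Promise<ServiceUrls>;

// Returns a getter that starts the load at most once; every caller awaits the
// same promise instead of reading a value that may not be set yet.
function createServiceUrlProvider(load: UrlLoader): () => Promise<ServiceUrls> {
  let pending: Promise<ServiceUrls> | null = null;
  return () => {
    if (pending === null) {
      // If loading fails, resolve to the production fallback rather than test URLs.
      pending = load().catch(() => PRODUCTION_FALLBACK);
    }
    return pending;
  };
}

// Example consumer: the image upload waits for the real URLs before building its target.
async function uploadTarget(
  getUrls: () => Promise<ServiceUrls>,
  fileName: string,
): Promise<string> {
  const urls = await getUrls();
  return `${urls.upload}/images/${fileName}`;
}
```

Caching the promise rather than the resolved value is what closes the race: anything that asks for the URLs before the load finishes simply waits for it. One trade-off in this sketch is that a failed load sticks with the fallback until the app restarts; retrying would need extra logic.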

Two major issues resolved for Wave this week.

Hopefully there aren’t too many more nasty bugs hiding but we’ll see 🤞

Sorry if you tried the app and ran into these issues!

It should be all good now.

On to Android next week!

Automatic Shorts - Part 3

Once Wave calmed down, I finally managed to get the third part of Automatic Shorts uploaded to YouTube.

I thought part 3 would be the shortest.

I already had the clips, just needed to combine the individual video files into one video file.

But I forgot how tricky loops in n8n can be, so it took a little while to brush up on how to continue the workflow after a loop.

Once I had that working, I found there’s a lot of room for adjustment with captions, so I made a few attempts to get decent-looking subtitles to display on the video.

The final result was right in line with the rest of the series, about 45 minutes per video.

With the series concluded, though, the result is a fairly powerful and cheap system for creating shorts from a full video.

I’m sure I could improve the prompting for GPT-4o, because it isn’t great at finding clips automatically from the transcript. Even paid solutions like opus.pro still struggle with that, although I haven’t tried it in a while.

But I do want to try this system with o3 instead and see whether taking a little more time helps it find better transcript chunks.

Plus, o3 now costs the same as 4o, which is kind of insane, so it wouldn’t be a huge investment.

Even with plain old GPT-4o, building the system and recording the series was a great learning experience, and hopefully others will find it interesting if nothing else.

And a nice way to kick off a Skool community!

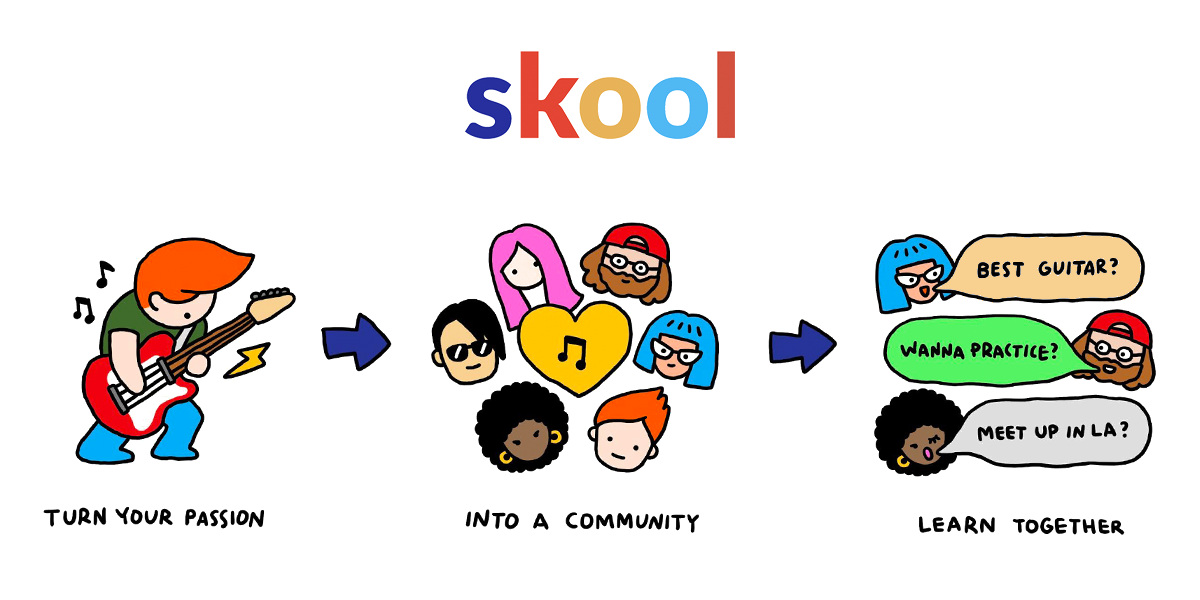

Back to Skool

I’m quite pleased with the progress of Learn Automation and AI after just one week.

25 members so far, up from 24 earlier this morning!

I’ve had a few people joining per day, which is great, but I wasn’t sure how active people would be.

Yesterday was the first day any of my posts got activity, which was exciting: a bunch of likes and a couple of comments.

And beyond that, I’ve had 3 different requests for various systems to build in n8n, which is exactly what I was looking for.

Easier to make tutorials based on request rather than try to guess, at least initially.

Eventually it will become a stats game to see what’s most requested, but this is plenty to work with.

And it’s great to see some genuine engagement with real comments and messages.

Looking forward to spending more time in Skool and hopefully growing a decent community, especially since it’s off to a great start.

Also have to throw out an affiliate link since they just started a $9 per month plan if you want to start your own community.

I’d be happy to join and help out:

Gemini CLI

Gemini CLI in the terminal

I finally gave Gemini CLI a quick try, and…

we’ll see.

It’s very powerful and convenient.

It’s easy to set up: a few commands and I had it connected to my repo, able to read all the code and start working on tasks.

The only problem is bandwidth.

I tasked it with adding some new unit tests. It struggled for a while and decided to start over, then it hit a context limit and said I’d have to downgrade from Gemini Pro to Gemini Flash unless I hooked up an API key and started paying for it.

I’ve gotten spoiled by the generous free usage through the browser so I’m not sure I’m ready to commit more money to it yet.

It was kind of wild: at one point I had Gemini CLI working on unit tests in the background, EAS building a new version of Wave, and GPT helping me strategize for Skool, all at the same time.

Multi-tasking to the max.

It may be worth throwing a little money at Gemini to have it create new pull requests for me in the background while I do other things.

Effectively hiring a decent contractor for very cheap.

It’s roughly $2 per 1 million tokens, but I regularly send 200k+ token requests without a second thought, so it could get pricey fast.
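To put rough numbers on that, using the approximate $2 per million tokens figure above (actual Gemini pricing varies by model, by input vs. output tokens, and by prompt size), a single 200k-token request and a hypothetical session of 50 such requests work out to:

```latex
\begin{aligned}
\text{cost per request} &= 200{,}000 \text{ tokens} \times \frac{\$2}{1{,}000{,}000 \text{ tokens}} = \$0.40 \\
\text{cost of 50 requests} &= 50 \times \$0.40 = \$20
\end{aligned}
```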

With proper strategy it could be pretty reasonable, though.

I might have to use AI further to strategize my AI usage.

Even though it hasn’t produced any results for me yet, I’d still highly recommend checking it out, if only because it’s easy and free to get started.

GPT Agent

OpenAI livestreamed the new Agent mode for GPT just yesterday, and I only got access last night so I haven’t tried it yet.

Basically, it’s an improvement on “Operator”: they’ve given a model (presumably o3) access to its own virtual machine, so it can browse the web like a human would, or scan the web through code like it does with Deep Research.

They’ve tried to train it to use either approach when needed and to execute its own code, so they’ve basically given an AI complete access to a fully internet-enabled computer.

Their demos included planning for a wedding and generating presentation slides from documents, which strongly suggests this could become a proper virtual assistant.

Since it runs on its own machine, you can give it a task and let it go. It can come back later with results, ask questions, or request approval before buying anything if you’ve given it your credit card.

But I can’t help but feel there’s some massive potential here.

It makes me wonder if this thing could operate a business nearly on its own.

Going to see some wild videos about it on YouTube, I’m sure.

I’ll be anxious to see how well my new GPT assistant does this weekend.

Dead internet theory becomes even more of a reality, and I’m completely ok with it.

And that’s it for this week! Another newsletter all about the future: streaming, AI, crypto and divs.

Those are the links that stuck with me throughout the week and a glimpse into what I personally worked on.

If you want to start a newsletter like this on beehiiv and support me in the process, here’s my referral link: https://www.beehiiv.com/?via=jay-peters. Otherwise, let me know what you think at @jaypetersdotdev or by email. I’d love to hear your feedback. Thanks for reading!