Engineering Management, Declining AI, and Tokens

The Weekly Variable

Quiet week personally, but AI continues to do its thing.

Topics for this week:

Software Engineering Manager

It’s only been 2 and a half years since I left full-time employment as a software engineering manager.

At that time, humans still did all the coding, and I think I used GPT maybe once a week.

I was super excited at the potential of GPT and other LLMs, but it wasn’t quite to the point of everyday usefulness.

I would remember to ask it questions occasionally or maybe play around with a simple API connection, but nothing I was dependent on.

Fast forward 2.5 years, and now when I go to build something, the first thing I do is map it out with AI and have it mock up the code for me.

I don’t write nearly as much code as I used to. I do make manual tweaks here and there, but I produce and review way more code than ever before.

I can spin up an entire codebase with a single prompt.

With AI becoming extremely capable at coding, being an engineer suddenly becomes much less about writing the code and more about managing it.

Depending on the model, basically all engineers now have an infinite army of junior- to senior-level developers at their command to produce whatever they are told to produce.

A core part of the job has been outsourced to a virtual agent available 24 hours a day, 7 days a week, that doesn’t care about a promotion.

Since most of the writing of code isn’t necessary any more, the engineer is left to focus on higher levels of the software lifecycle.

The engineer is still engineering the code, but in a completely different way, and spending more time managing that code than engineering it.

It’s an interesting time to be alive, watching something that seemed so universal shift completely in a matter of months.

Pretty soon the term software engineer will mean something completely different than it did just 3 years ago.

Less Code Red

OpenAI continues to roll out the features as they try to compete with Google’s gradual AI dominance.

GPT-5.2 rolled out last week, and they updated their code-specific “Codex” models with GPT-5.2-Codex.

This seems like an incremental update from GPT-5.1-Codex-Max, which just came out a few weeks ago, but they claim improvements in cybersecurity understanding as well as better tool calling. Ultimately, it’s supposed to be better at working on large-scale tasks for long periods of time without hallucinating its way into unnecessary changes.

The previous Codex version claimed the ability to focus on a task for up to 24 hours, so an improvement past that would be truly impressive.

On top of that, OpenAI also launched GPT-image-1.5 to compete with Google’s Nano Banana Pro.

GPT Image 1.5 vs 1

It’s wild to see the comparison directly.

The old model image on the right doesn’t look bad, but it does feel a little off, missing some sort of realistic element.

The image from the new model on the left looks much more like a real photo.

Not sure I’d spot that as AI at first glance.

One thing GPT models in general are particularly good at is following instructions.

This example was pretty impressive:

Instructions for a 6×6 grid of unique items

Given the list of 36 items, GPT was able to generate an image that contained all the items as specified.

All items correctly rendered from the list above

Following instructions is easy to take for granted until the model stops doing what you told it.

Really impressive to see that level of depth.

I’m not sure they’ve beaten Nano Banana Pro yet but I’m sure they’ll be getting close as they continue to play catch-up.

More releases from OpenAI coming soon I would imagine, but they weren’t the only ones to release more new AI models this week…

Google’s Lead

After having its search dominance shaken to its core, Google has responded impressively well to the AI race.

Gemini 3 Pro is a super powerful model that upgraded the already leading Gemini 2.5 Pro.

And to offer a smaller, faster, and cheaper alternative, Google updated its Flash series of models with Gemini 3 Flash on Wednesday.

The Pro model does the heavy lifting, using a good amount of reasoning tokens to think through solutions before spitting out an answer. Flash models can use reasoning tokens as well, but that’s not really the intent.

Given the name, its primary intent is speed.

And it’s already reaching the top of the leaderboard, not far behind Gemini 3 Pro.

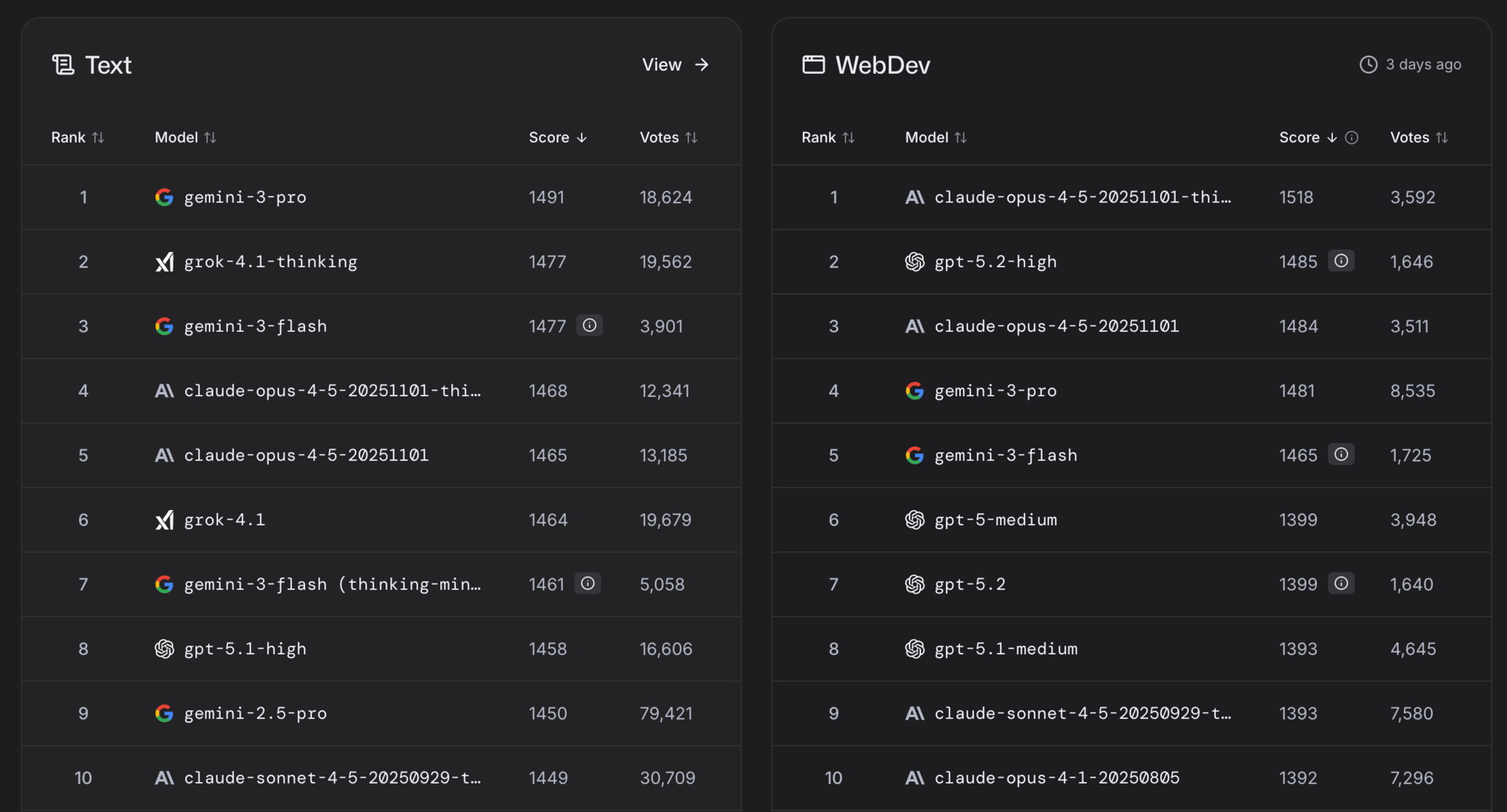

gemini-3-pro rank 1, grok-4.1-thinking rank 2, gemini-3-flash already rank 3

With the release of Flash, Google has 2 models in the top 3 for text and in spots 4 and 5 for WebDev.

gemini-3-flash in 3rd for Intelligence, 2nd for speed, 5th for price

Artificial Analysis has Gemini 3 Flash ranked highly for Intelligence, Speed and Price as well.

The Flash models aren’t as great for chatting or deep development work, but they are really great for digesting data and giving quick answers.

A good all-around model for an API.

And not too big of a price jump from flash-2.5: up $0.20 for input and up $0.50 for output.

We’ll see what other models release in the next few weeks. Hopefully these poor engineers get a chance to take a break for the end of the year.

But for now Google is doing its best to be a top contender in the AI race.
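To get a feel for what that jump means on an actual bill, here’s a rough sketch. It assumes the $0.20 and $0.50 increases are per 1 million tokens (the usual unit for API pricing, but an assumption here), and the workload numbers are made up for illustration.

```python
# Price increases quoted above for moving from flash-2.5 to Gemini 3 Flash.
# ASSUMPTION: these are dollars per 1 million tokens.
INPUT_INCREASE_PER_MILLION = 0.20
OUTPUT_INCREASE_PER_MILLION = 0.50


def extra_monthly_cost(input_tokens: int, output_tokens: int) -> float:
    """Extra dollars per month for the same workload after the price increase."""
    input_extra = input_tokens / 1_000_000 * INPUT_INCREASE_PER_MILLION
    output_extra = output_tokens / 1_000_000 * OUTPUT_INCREASE_PER_MILLION
    return input_extra + output_extra


# Hypothetical workload: 500M input tokens and 50M output tokens in a month.
print(f"${extra_monthly_cost(500_000_000, 50_000_000):.2f}")
```

Under those assumptions, even a fairly heavy API workload only picks up a modest extra cost, which is why the jump doesn’t feel too big.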

Opus Decline?

Saw this on my feed the other day:

Basically someone saying they’ve seen a noticeable decline in the quality of output from using Claude Opus 4.5 through Claude Code.

This seems to be a recurring pattern with a lot of the giant AI models.

I remember people talking about a similar issue with GPT 5.

It started out super smart when people had early access, but when it went to public release, it seemed to decline in performance quite a bit.

And a few others have been claiming the same with Claude Opus 4.5.

It seems noticeably less performant.

There’s some crazy inference architecture happening behind the simple idea of sending a request to an AI and getting an answer back.

I would imagine a number of services and connections are involved in running text through a Large Language Model, and with the insane amounts of traffic these companies are getting, I’m sure they’re having to shift resources around constantly.

But it’s an interesting pattern, and there may be a slight marketing angle to it as well.

Deploy more resources to the newest model so it performs the best and looks the best when people are reviewing.

But after a few weeks, slowly start to shift the resources away from the big marketing focus and on to other projects…

Doesn’t seem completely out of the question, especially in the current war for GPUs.

I haven’t been using Opus 4.5 much lately so can’t speak to my own experience, but I hear almost nothing but good things.

Hopefully this is just a temporary, even subjective observation.

And maybe it’s just an aspect of the AI world that sometimes the AI won’t be as smart as other times, which I have noticed.

Certain times of the day, GPT gets noticeably slower than other times.

There’s huge demand for these services, so I can’t imagine the continual struggle to support them.

Some day I’d love to learn how these AI services operate at scale but for now I’m happy to speculate based on social media comments.

And hope that temporary AI underperformance remains temporary.

So Many Tokens

I caught this clip on X and it’s kind of hard to comprehend.

Sam Altman talks in rough numbers:

10 trillion tokens per day from one big AI company

a human produces about 20,000 tokens per day

8 billion people on Earth * 20,000 = 160 trillion

By those numbers, one company already produces about one-sixteenth of the tokens all humans produce naturally.

So a single company is already within sight of the information output of all of humanity, which is wild to think about.
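Those rough numbers are easy to sanity-check. Here’s a minimal sketch in Python that uses only the figures quoted above; the variable and function names are just for illustration.

```python
# Rough figures quoted in the clip (all approximate).
COMPANY_TOKENS_PER_DAY = 10 * 10**12  # 10 trillion tokens per day
HUMAN_TOKENS_PER_DAY = 20_000         # tokens one person produces per day
WORLD_POPULATION = 8 * 10**9          # 8 billion people


def humanity_tokens_per_day() -> int:
    """Total tokens all humans produce per day, by the quoted estimate."""
    return WORLD_POPULATION * HUMAN_TOKENS_PER_DAY


def company_share() -> float:
    """One company's daily output as a fraction of humanity's daily output."""
    return COMPANY_TOKENS_PER_DAY / humanity_tokens_per_day()


print(f"Humanity: {humanity_tokens_per_day():,} tokens/day")  # 160,000,000,000,000
print(f"Company share: {company_share():.2%}")                # 6.25%
```

Taken at face value, the quoted figures put one company at roughly a sixteenth of humanity’s daily output, so it would take about a 16x increase to match it.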

Before the AI craze, we were in the “big data” era.

Companies were producing more data than they knew what to do with, but couldn’t possibly consume it all, so it became “data science” to try to figure out what data was actually important and what could be ignored.

Then AI comes along and compresses all that data into something that you can talk to.

But it can also now produce more data than ever before.

Almost 2 steps forward and 1 step back.

And it’s interesting to hear the shift in thinking about information.

I do see this becoming a subtle transition where things are looked at from an AI perspective.

He didn’t say words spoken, he said tokens.

Humans produce 20,000 tokens per day.

Weird to hear now, but I bet it might be the norm in the next few years.

We’ll see.

We’re many trillions of tokens away from that happening, but it may be sooner than expected.

And that’s it for this week. AI is shifting engineering and maybe perspectives altogether.

If you want to start a newsletter like this on beehiiv and support me in the process, here’s my referral link: https://www.beehiiv.com/?via=jay-peters.

I also have a Free Skool community if you want to join for n8n workflows and more AI talk: https://www.skool.com/learn-automation-ai/about?ref=1c7ba6137dfc45878406f6f6fcf2c316

Let me know what else I missed! I’d love to hear your feedback at @jaypetersdotdev or email [email protected].

Thanks for reading!