The Weekly Variable

It’s hard to keep up with everything happening in AI, and the scary part is that it’s only going to get faster.

Here is a huge update on all things AI from Google and OpenAI:

Dethroned Already

Surprisingly, or maybe unsurprisingly, Sora has already been dethroned as the top text-to-video model.

Google revealed Veo 2 this week and it is noticeably more realistic than the videos showcased by Sora.

It’s weird to keep saying that videos are looking more and more realistic, but Veo 2 has a more grounded appearance while Sora tends to look more stylistic.

I couldn’t quite put my finger on it before, but now that I’ve seen more consistently lifelike results from Veo 2, it’s more obvious that Sora is less consistently realistic.

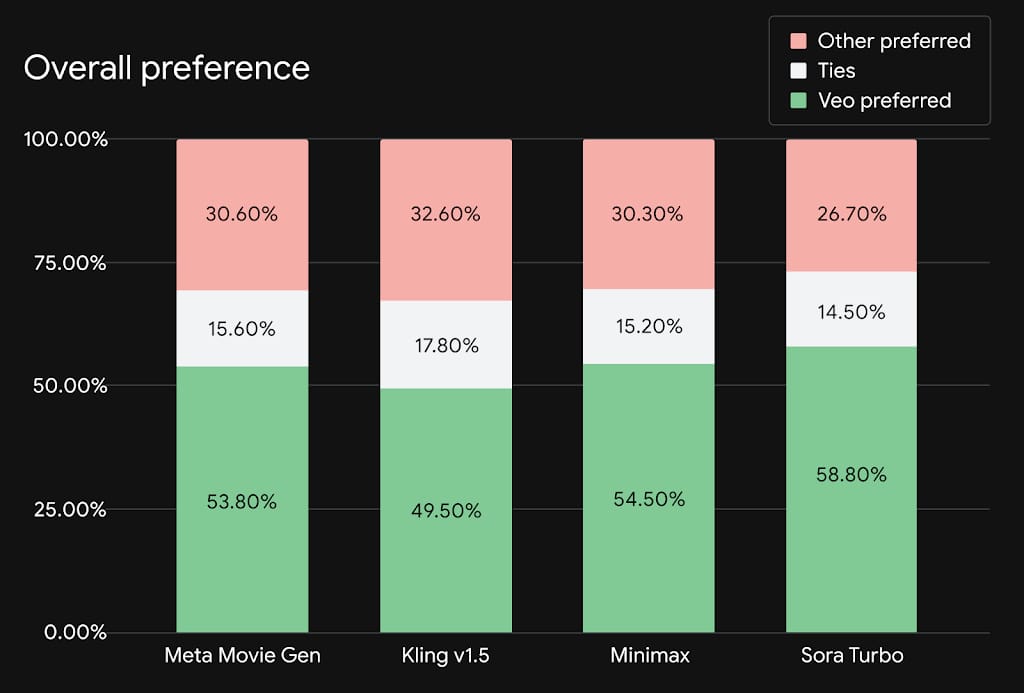

I may not be the only one to think that, though. According to Google’s results on Meta’s MovieGenBench benchmark, users preferred Veo 2 outputs nearly 50% of the time over Sora and other models when shown more than 1,000 videos.

But more importantly, videos generated by people on X.com that already have access to Veo 2 are showcasing realistic results for themselves, results not curated by Google.

Sora was incredibly impressive when it first debuted in February and it’s insane to think that since then, in less than a year, at least 5 other competitive models have sprung up, and some have even surpassed Sora in capability.

It may be unsurprising that Google has pushed past OpenAI in video capability considering they own one of the largest video platforms on the planet with YouTube.

And rumor has it, Google has been playing with AI concepts for years, even being the birthplace of the transformer architecture that has paved the way for this AI boom.

Google and Alphabet have enough resources to remain one of the biggest players in the Artificial Intelligence game, but more on that later.

For now I’ll be waiting for my email from Google that I have access to Veo 2.

So long, Sora! You had a great one-week run.

Google’s On Top

I have to give credit to Wes Roth’s video for pointing out how Google is really pushing ahead in the AI race.

Recently Google has been topping the AI benchmarks in a number of categories, and I’m ashamed to admit I haven’t been paying attention.

I think it’s because their rollout of the various versions of Gemini has been so scattered.

The few times I’ve used Gemini in something like Google Docs, the results have been lackluster, although it does seem like Google Search summaries are getting better.

I still tend to search with Perplexity first.

MidJourney is my go-to for image generation.

And I’ve been using a combination of o1 models and Sonnet 3.5 for coding and general technical advice, mostly because that’s what’s available in Cursor right now, but maybe it’s time to roll Google’s AI into the mix.

Gemini in the top 2 spots

Beyond that, Google is working on additional projects:

a mobile-integrated personal AI assistant with Pixel 9 and Project Astra

extending the personal assistant idea to the physical world with augmented reality through Android XR and smart devices similar to Ray-Ban Meta Smart Glasses

Jules, an AI coding assistant

Project Mariner, a browser-based research assistant and web agent

Deep Research, a more thorough, reasoning-based research assistant similar to OpenAI’s o1 models

And of course, the NotebookLM podcast feature, which you can now chat with!

After generating the podcast and enabling the interactive feature in NotebookLM, you can click a button to ask a question. The automated podcast voices will recognize your input and smoothly add their response to the conversation before returning to whatever you had them focus on for the deep dive.

It’s hard to keep track of all the AI projects going on right now, but given that impressive list, I will certainly be keeping a better eye on what Google is offering, looking for opportunities to use those tools in my regular workflow.

More Than Video

It’s a little too easy to take for granted the unbelievable progress with all of the generative models.

I went from being mind-blown over Sora in February to unimpressed with Sora after Veo 2’s release this week.

It’s easy to take that leap for granted because it seems obvious.

Just make more realistic videos.

Shove more video data into the model so it learns to be more accurate.

But the deceptively tricky part is that these video models aren’t purely visual models.

Yes, they are learning how things look, but they are also learning how things work.

They are essentially “world models”.

To generate a realistic-looking video, the model has to accurately produce every element in the scene, not just the pet owner and the four-legged dog that most people are focused on. It also needs to know how the snow on the ground crunches underneath them, how the out-of-focus trees in the background sway in the wind, how light reflects off moving Golden Retriever fur at midday, and how a red winter jacket bends with the petting motion of a person’s arm.

It’s an insane amount of data for something as seemingly normal as a few seconds of someone playing with their puppy in the snow.

I can record that scene on my phone at any time.

But to generate that scene from a text prompt is a completely different story.

Marc Andreessen reminded Chris Williamson (and me while listening) of that fact on Chris’s podcast this week.

It’s easy to miss such a huge detail because it’s not immediately obvious.

Text-to-video isn’t just creating moving images; to some extent, it’s understanding observable physics.

This understanding not only makes physics research more feasible for AI, but also enables robots to maneuver through the real world, interpreting water, light, motion, and the patterns of physical objects.

Not only will we soon be able to generate infinite custom Marvel movies, but we’ll also develop deeper understanding of how the world works, and have robots safely wandering around the world, weaving through crowds, working harder than we could ever dream.

And among all the other things they are working on, Google is also actively applying this concept to rapidly training robots…

Google’s Genesis

As if Google wasn’t doing enough, they have also been pushing the boundaries of real-world robotic training, similar to what Nvidia’s Omniverse project hopes to achieve.

Google has an open-source project called Genesis, a “generative and universal physics engine for robotics and beyond,” which takes the “world model” concept from above and stretches it way past entertaining videos.

What’s really crazy is that you can potentially run this model on your personal computer if you happen to have a rather expensive RTX 4090 video card.

This project is listed as multiple things including:

A universal physics engine re-built from the ground up, capable of simulating a wide range of materials and physical phenomena.

A lightweight, ultra-fast, pythonic, and user-friendly robotics simulation platform.

A powerful and fast photo-realistic rendering system.

A generative data engine that transforms user-prompted natural language description into various modalities of data.
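To make “physics engine” a little more concrete, here is a toy sketch in plain Python. It is not Genesis or its API, and the names (`Ball`, `step`, `simulate`) and the bounciness value are made up for illustration. It only shows the core loop such engines are built around: advance the state of the world by a tiny time step, apply forces like gravity, and handle collisions.

```python
from dataclasses import dataclass

GRAVITY = -9.81  # m/s^2, acceleration along the vertical axis


@dataclass
class Ball:
    height: float    # metres above the ground
    velocity: float  # metres per second, positive is up


def step(ball: Ball, dt: float, bounciness: float = 0.8) -> Ball:
    """Advance the ball by one small time step (semi-implicit Euler)."""
    velocity = ball.velocity + GRAVITY * dt
    height = ball.height + velocity * dt
    if height < 0.0:
        # The ball hit the ground: clamp it to the surface and reverse
        # its velocity, losing some energy in the bounce.
        height = 0.0
        velocity = -velocity * bounciness
    return Ball(height, velocity)


def simulate(start_height: float, seconds: float, dt: float = 0.01) -> list[float]:
    """Drop a ball from rest and record its height after every step."""
    ball = Ball(start_height, 0.0)
    heights = [ball.height]
    for _ in range(round(seconds / dt)):
        ball = step(ball, dt)
        heights.append(ball.height)
    return heights


if __name__ == "__main__":
    heights = simulate(start_height=2.0, seconds=3.0)
    print(f"lowest point: {min(heights):.2f} m, highest: {max(heights):.2f} m")
```

A real engine does the same kind of stepping for thousands of interacting objects and materials at once, which is where the expensive video card comes in.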

This is another project I had no idea Google was working on, but it’s readily available for download and ready to apply a general understanding of the physical world to whatever you want to prompt it with.

I might have to finally invest in a robot to really start testing some of these ideas out.

The future is here folks and it’s only going to get wilder.

The Next Leap

I’m glad to see Google making huge strides in AI.

For a time, it seemed like Google was in maintenance mode, prioritizing profit over building new and better technologies.

But OpenAI came along with something you could ask a question the way you would ask another person, and it would not only understand but also provide a person-like answer, something Google had failed to accomplish first.

And it seemed like OpenAI shook Google to its core.

Suddenly they might lose their technological dominance.

They were forced to make progress by a single company’s technological breakthrough, and now Google is producing all kinds of progress as a result.

But OpenAI is also continuing to push forward.

Their 12 Days of Shipmas has produced some amazing features.

Here’s a list generated by Perplexity:

Day 1: Full release of OpenAI's o1 model and ChatGPT Pro.

Day 2: Launch of a Reinforcement Fine-Tuning Research Program.

Day 3: Introduction of Sora, a text-to-video AI tool that generates clips from user input.

Day 4: Expansion of ChatGPT's Canvas feature to all users with new functionalities.

Day 5: Integration of ChatGPT into Apple devices (iPhone, iPad, macOS).

Day 6: Launch of advanced voice features, including a Santa-themed voice mode.

Day 7: Introduction of Projects in ChatGPT for organizing and customizing conversations.

Day 8: Enhanced web search capabilities in ChatGPT with citations.

Day 9: Developer-focused updates including improvements to the Realtime API and new fine-tuning methods.

Day 10: Launch of the "1-800-CHATGPT" service for user interaction via phone.

Day 11: Video and screen-sharing features in ChatGPT's Advanced Mode.

Day 12: Unveiling of OpenAI's latest reasoning model, o3, designed for advanced problem-solving in math and science.

Not only did they just release access to o1 this month, but they also previewed their next model, o3.

OpenAI claims that o3 reaches even closer to the dream of AGI, an entity that reasons like a human.

o1 is already nearing that idea. It handles vague direction much more thoroughly than any of the other models I’ve chatted with, but there’s still a ways to go.

I think the goal for Artificial General Intelligence is that you could give it a very broad instruction like “go discover new physics” and it would go do research on its own, eventually coming back with one or more new discoveries in physics, ideally without the aid of any humans, or maybe with some human help for physical world access if it needed it.

I’m not sure o3 is there yet, but it’s working toward that goal.

Alongside the primary model, o3, there will be an o3-mini with three reasoning levels (low, medium, and high), which can be used depending on the complexity of the task. The more complex the task, the higher the reasoning required.

The full o3 model will then have the highest amount of reasoning power for the hardest problems.

According to an AGI benchmarking test, o3 has well outperformed all previous versions of OpenAI’s models.

I would imagine the tests are rather complicated, since it took more than $1,000 of compute for o3 to try to complete a task, but it completed 88% of them, which is more than double the score of o1 at its highest capacity.

o3 is set to release at the end of January, and I’m hoping it will be available to ChatGPT Pro members so I can throw complex tasks at it, just maybe not $1,000 tasks.

And that’s it for this week! Google is moving to dominate the AI space, but OpenAI remains a leader.

Those are the links that stuck with me throughout the week and a glimpse into what I personally worked on.

If you want to start a newsletter like this on beehiiv and support me in the process, here’s my referral link: https://www.beehiiv.com/?via=jay-peters. Otherwise, let me know what you think at @jaypetersdotdev or email [email protected], I’d love to hear your feedback. Thanks for reading!