The Weekly Variable

App development finally expands to a new domain that I had been avoiding until now.

Fewer huge AI model releases, but lots of automation potential.

Topics for this week:

Service Layer

In typical software fashion, Wave is still a few weeks out from being released, but it may be down to its last major feature.

Up to this point, the app itself consisted of 2 parts: a client and a database.

React Native covers the app portion and Supabase covers the backend needs.

Technically, Supabase is a “Backend as a Service” (BaaS) that could take care of all the app’s backend needs, but I’ve done my best to avoid that.

There have been a few close calls where I almost used their Edge Functions to host a few custom APIs to call from the app, but I’ve managed to avoid it so far.

I really only chose Supabase to get the app up and running, but ideally I’d like to transition to my own custom database and my own custom service layer.

And with Push Notifications becoming a priority, it’s time to finally build that service layer, and not use Supabase to do it.

The rough idea right now is a Go container hosted on AWS App Runner to lay the foundation for a scalable backend.

Gemini Pro approved:

Gemini Pro conclusion

As much as I’ve been fighting it to keep the app scope minimal, I’m very excited to start working on a scalable service layer.

Lots of fun potential.

Automated Results

I’ve fallen behind on YouTube uploads, but the channel still continues to do its thing.

Not as many comments this week, but still a decent amount of subscriber growth and nearly 1000 views rolled in despite not much activity on my part.

There’s an easy-win video I’ll probably get posted this weekend, but more on that later.

YouTube Channel stats

Nearing the 500 mark on subscribers, nearly halfway to the goal of 1000 total, all with pretty subpar effort.

A few uploads this week and this trendline should pick right back up.

OpenAI’s Image API

Speaking of Automation tutorials, I’ve been waiting for OpenAI’s new image gen service to get an API.

Granted, there are a few other image generation services out there that are pretty solid too, so there may have been alternate solutions, but up until this week, the only way to use OpenAI’s image gen was through the browser.

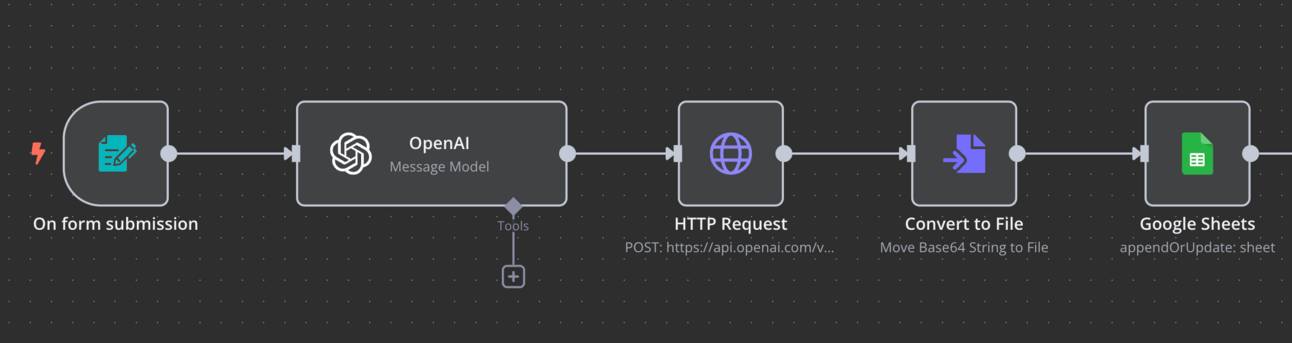

If you wanted to call the service through n8n, there wasn’t a way to do that until Wednesday.

n8n call to OpenAI’s image service

I’ve had my eye on a video to automate LinkedIn carousels and this should be just the tool to do that.

A quick test with a low-effort, no-context prompt produced this:

Plenty of room for improvement with a proper prompting system, but a really promising start.

Looking forward to building out that automation (and video!) soon.

MCP

Model Context Protocol (MCP) has been one of the hot trends in AI recently, at least for the automation space.

Platforms like n8n and Make.com make it much easier to structure regular calls to an AI and provide tools like Gmail or a calendar for the AI to interact with, but each different function in an app like Gmail requires a separate definition.

26 Options for Gmail

Due to the nature of APIs, each of these needs its own “node” to be able to interact with that functionality correctly.

Then the AI needs to have connections to each of these nodes that you want it to use so that it can properly send and retrieve data between them.

MCP attempts to resolve that problem by providing one big document that shows the AI all the ways it can interact with just “Gmail”, rather than having to connect up each step like:

“Get Message”

“Create a label” for that message

“Mark a message as read”

“Reply to a message”

Looking something like this:

Multiple Gmail nodes

To something like this:

MCP HTTP Request

With an MCP server, the AI can get context into all the things it needs to do, including proper documentation and examples for how it should be interacting with all of Gmail, rather than each individual API or functionality of Gmail.
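To make the idea concrete, here is a simplified sketch of a single catalog that lists every available tool with a description and dispatches calls by name. It is not the actual MCP wire format or an official SDK, only the shape of the idea (the real protocol exposes comparable list and call operations for tools). The tool names and stub behaviors are hypothetical.

```go
package main

import (
	"fmt"
	"sort"
)

// tool pairs a human-readable description with the function that runs it.
type tool struct {
	Description string
	Run         func(args map[string]string) string
}

// gmailTools is a hypothetical catalog; every entry is an illustrative stub.
var gmailTools = map[string]tool{
	"get_message": {
		Description: "Fetch a message by id",
		Run:         func(a map[string]string) string { return "fetched message " + a["id"] },
	},
	"create_label": {
		Description: "Create a label for a message",
		Run:         func(a map[string]string) string { return "created label " + a["name"] },
	},
	"mark_as_read": {
		Description: "Mark a message as read",
		Run:         func(a map[string]string) string { return "marked " + a["id"] + " as read" },
	},
	"reply_to_message": {
		Description: "Reply to a message",
		Run:         func(a map[string]string) string { return "replied to " + a["id"] },
	},
}

// listTools returns "name: description" for every tool, sorted by name.
// This plays the role of the one document the AI reads to learn its options.
func listTools() []string {
	names := make([]string, 0, len(gmailTools))
	for name := range gmailTools {
		names = append(names, name)
	}
	sort.Strings(names)
	out := make([]string, 0, len(names))
	for _, name := range names {
		out = append(out, name+": "+gmailTools[name].Description)
	}
	return out
}

// callTool dispatches a request to the named tool.
func callTool(name string, args map[string]string) (string, error) {
	t, ok := gmailTools[name]
	if !ok {
		return "", fmt.Errorf("unknown tool %q", name)
	}
	return t.Run(args), nil
}

func main() {
	for _, line := range listTools() {
		fmt.Println(line)
	}
	result, err := callTool("get_message", map[string]string{"id": "42"})
	fmt.Println(result, err)
}
```

The point of the design is that the AI needs one connection (list, then call) instead of one wired-up node per Gmail function.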

MCP can be a really powerful tool for making AIs much better at working with other apps, so it’s worth a full video breaking it all down.

I’ve done a little research myself, but haven’t tested it out much yet so that will also be high on the list of upload todos.

More on MCPs to come but for now you can read more about Anthropic’s approach as the MCP leader.

The AI Council

Despite no major AI model releases this week (that I know of anyway), I still had models on the mind.

More and more, I’ve been turning to different AIs for different uses, so I kept thinking about how I need a way to talk to a few at once: pose one question and get answers from each of them.

Of course this could be done by writing out the question once, opening multiple browser tabs, and pasting it into each one, but that’s not the most fun or efficient way to do it.

I’ve started down the AI Pipeline route before, writing it from scratch in Go, but this week I decided to just use n8n to get a quick idea going. The goal really was to ask GPT-4.5, o3, o4-mini, and Gemini Pro technical questions and get all their opinions.

So I set something up to do that:

AI Council in n8n

Using Telegram, I can “@” specific models or groups of models:

@gpt-4.5

@o1-pro

@gpt-4.1

@o4-mini

@gemini-pro

@o3

@tech (gpt-4.5, o3, o4-mini)

@summarize

“@tech” will get a response from each model in that group.

“@summarize” will call o3, GPT-4.5, and o4-mini-high, wait for all their responses, then use Gemini Pro to read those responses and summarize them with its own input.

Kind of a fun project that I’m sure I’ll do a video for soon as well, but I’m not sure how practical it actually is.

Some of these models are pretty expensive to operate, so depending on the perspective, it might not be worth spamming questions to all these APIs when some can cost up to $0.20 for one response.

But for big technical questions it could be nice to have.

The benefit of the browser is that all of them are free to use right now (assuming you have a pro subscription for OpenAI), so overall the hassle of multiple tabs is probably still a better deal than one connected conversation, but we’ll see.

I’ll test it out more this week and decide if this is something that’s worth a little extra cost to use regularly.

And that’s it for this week! Service layers, image generators and a council of AIs.

Those are the links that stuck with me throughout the week and a glimpse into what I personally worked on.

If you want to start a newsletter like this on beehiiv and support me in the process, here’s my referral link: https://www.beehiiv.com/?via=jay-peters. Otherwise, let me know what you think at @jaypetersdotdev or email [email protected], I’d love to hear your feedback. Thanks for reading!