The Weekly Variable

Turns out automatically captioning video is a little tricky, but not that tricky for GPT.

Topics for this week:

Brand Building at Scale

Progress on the AI pipeline was moving at a brisk pace but hit a bit of a slowdown with the video captioning process.

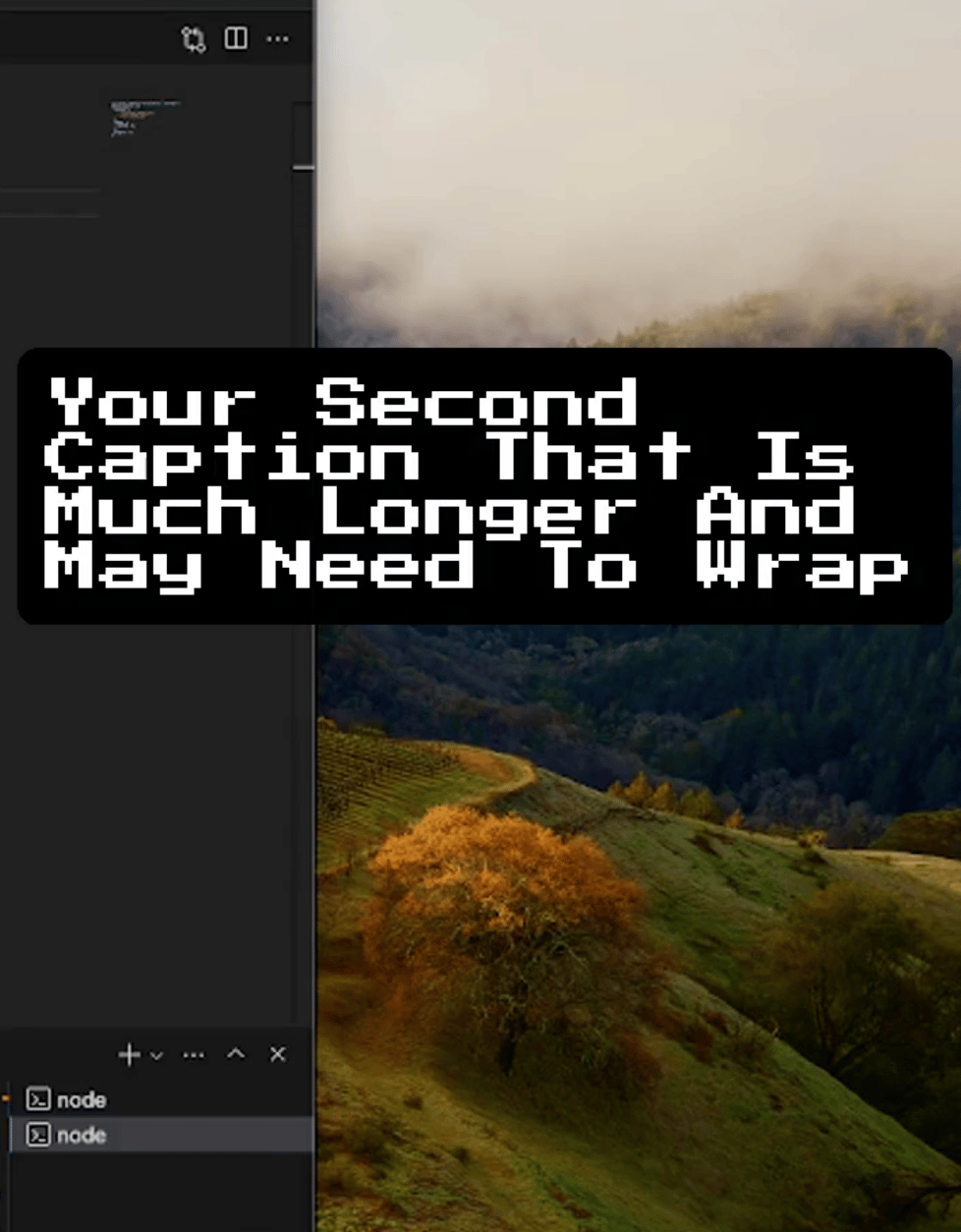

I’ve been on a mission to figure out how to create captions like the one above ever since I first saw them and realized they were probably made with ffmpeg.

After a little digging, I learned that the font is called Montserrat (and then started seeing it everywhere), and that ffmpeg really has 2 ways of adding captions like that.

Either you burn in a custom caption file, or you overlay custom graphics on top of the video.
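For anyone curious what the caption-file route can look like, here’s a minimal Python sketch. It assumes ffmpeg was built with libass (which its `subtitles` filter needs) and that Montserrat is installed where libass can find it. It renders captions to SRT text and builds an ffmpeg command that burns them in, using the filter’s `force_style` option to swap in the font. The `Caption` class, helper names, and style values are hypothetical placeholders, not code from my pipeline.

```python
import shlex
from dataclasses import dataclass


@dataclass
class Caption:
    start: float  # seconds
    end: float    # seconds
    text: str


def srt_timestamp(seconds: float) -> str:
    """Format seconds as an SRT timestamp (HH:MM:SS,mmm)."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def to_srt(captions: list[Caption]) -> str:
    """Render captions as SRT cues: index, time range, text, blank line."""
    cues = [
        f"{i}\n{srt_timestamp(c.start)} --> {srt_timestamp(c.end)}\n{c.text}\n"
        for i, c in enumerate(captions, start=1)
    ]
    return "\n".join(cues)


def burn_in_command(video: str, srt_path: str, output: str,
                    font: str = "Montserrat", size: int = 24) -> list[str]:
    """Build an ffmpeg argv that burns the SRT file into the video.

    force_style overrides the default subtitle style. Assumes a simple
    srt_path; colons or quotes in it would need ffmpeg filter escaping.
    """
    style = f"FontName={font},FontSize={size},Outline=2"
    return [
        "ffmpeg", "-i", video,
        "-vf", f"subtitles={srt_path}:force_style='{style}'",
        "-c:a", "copy",  # copy audio as-is; video is re-encoded by the filter
        output,
    ]


if __name__ == "__main__":
    caps = [Caption(0.0, 1.2, "TURNS OUT"), Caption(1.2, 2.5, "A LITTLE TRICKY")]
    print(to_srt(caps))
    print(shlex.join(burn_in_command("clip.mp4", "captions.srt", "clip_captioned.mp4")))
```

Returning the command as a list means it can go straight to something like `subprocess.run(cmd, check=True)` without shell quoting, after writing the SRT text to `captions.srt`.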

I’ve spent a good chunk of this week trying to implement both approaches programmatically, but it’s proving less straightforward than I expected.

So far these are 2 of my better candidates:

Option 1 - Subtitle File

Option 2 - Python Generated

I’m leaning toward the caption file option because it’s a little more straightforward: it takes less tweaking to get the captions positioned and displayed the way I want.

But the custom graphics approach with Python offers many more animation options.
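The graphics route can be sketched in a similar way. This assumes each caption has already been rendered to a transparent PNG (for example with Pillow; the file names below are hypothetical), and uses ffmpeg’s `overlay` filter with its `enable` timeline option so each image only shows during its time window. The sketch just builds the filter graph and command; it doesn’t render any images, and the class and function names are illustrative.

```python
import shlex
from dataclasses import dataclass


@dataclass
class CaptionImage:
    path: str     # hypothetical pre-rendered transparent PNG
    start: float  # seconds when the caption appears
    end: float    # seconds when it disappears


def overlay_filter(images: list[CaptionImage], y_expr: str = "H*0.70") -> tuple[str, str]:
    """Chain one overlay per caption image.

    Input 0 is the video; input i (1-based) is images[i - 1].
    Returns the filtergraph string and the label of the final video stream.
    """
    if not images:
        raise ValueError("need at least one caption image")
    parts = []
    prev = "0:v"
    for i, img in enumerate(images, start=1):
        label = f"v{i}"
        parts.append(
            f"[{prev}][{i}:v]overlay=x=(W-w)/2:y={y_expr}"  # centered horizontally
            f":enable='between(t,{img.start:.2f},{img.end:.2f})'[{label}]"
        )
        prev = label
    return ";".join(parts), prev


def overlay_command(video: str, images: list[CaptionImage], output: str) -> list[str]:
    """Build an ffmpeg argv that overlays the caption images on the video."""
    graph, last = overlay_filter(images)
    cmd = ["ffmpeg", "-i", video]
    for img in images:
        cmd += ["-i", img.path]
    cmd += [
        "-filter_complex", graph,
        "-map", f"[{last}]",  # the fully captioned video stream
        "-map", "0:a?",       # keep the original audio if there is any
        "-c:a", "copy",
        output,
    ]
    return cmd


if __name__ == "__main__":
    caps = [CaptionImage("cap_000.png", 0.0, 1.5), CaptionImage("cap_001.png", 1.5, 3.0)]
    print(shlex.join(overlay_command("clip.mp4", caps, "clip_captioned.mp4")))
```

A still PNG input only has one frame, but `overlay` repeats the last frame by default (`eof_action=repeat`), so each image stays available until `enable` switches it off. Chaining one overlay per caption keeps the idea simple; animation would come from making the `x`/`y` expressions depend on `t` or from rendering more frames per caption.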

Ultimately, it will most likely end up being a combination of the 2 options, depending on how fancy the captions need to be.

Until then, I’ve got some clips to cut and caption, but I’m getting closer to checking the “caption video” box on the AI pipeline’s big to-do list!

Build vs Buy

While I’m putting myself through all the work of figuring out how to caption my own videos with code, I’m well aware that I could use CapCut, a really popular captioning tool, right now, and Opus.pro for clipping shorts from long-form videos.

There are also a handful of other options, like veed.io, inVideo.io, and even a free option from Adobe Express, that could all get the job done.

But those options aren’t good enough.

They’re all some version of “freemium,” where the app is technically free, but as soon as you want to do anything beyond making 2 test videos, you’ll have to pay quite a bit.

And even then, you’ll run out of tokens or hit limits very quickly.

At least for the number of videos I have in mind anyway.

I want to cut and caption videos at scale without paying at scale.

But maybe not.

I’m at a bit of a crossroads for solving this marketing issue.

I see two options:

grow faster now with other tools

grow slower with my own tools

I could use something like Opus.pro to create short video clips (with captions) and get the YouTube and other algorithms working for me now, because that part will take time no matter what.

It may be worth the initial investment in some of those apps to get the brand ball rolling, because I’m betting it will take 3 to 6 months of daily uploads to hit around 1,000 followers on YouTube, depending on clip quality.

Or I could take the time to finish building my own process and then use it to inject content into YouTube.

I’m leaning toward prioritizing the habit of posting every day again while coding at the same time.

Seems like an efficient procedure to me.

We’ll see, though.

By next week I’ll make a decision based on how much progress I’ve made.

If I have a rudimentary system for finding, cutting and captioning clips on my own, I’ll go with my custom and cumbersome process first.

But if I’m not at that point by next week, I may look at throwing some money into paid options to build momentum.

I’ll keep thinking about it, but let me know if you have any better ideas!

o1 Strawberry

I briefly mentioned last week that OpenAI released o1-preview (o1 was known as Strawberry before release) to paid users, and now that I’ve had a chance to test it, I’m impressed.

Claude Sonnet had been my go-to for programming help lately because, in my experience, it’s been about 85% accurate.

I keep trusting it to do what I need it to do, and it’s usually able to deliver first try or within 2 or 3 attempts.

Working with custom captions has been more of a challenge for Sonnet lately, although I may not be explaining the desired outcome clearly, either.

I hit the message cap the other day, and Anthropic told me I had to wait an hour and a half to use Sonnet again.

I certainly wasn’t going to wait that long, so I took the caption script Sonnet was struggling with and sent it over to o1-preview, which corrected it completely on the first try.

o1-preview fixing Sonnet’s script

It only had 1 minor visual issue, which it fixed after a second prompt.

o1-preview correcting its mistake

I was hoping for that result but not expecting it.

The result was the screenshot I referenced earlier:

Caption 2 from o1-preview

That’s just one use case, but it’s pretty amazing to watch o1-preview take 15 to 30 seconds to “think” about what it’s going to do (essentially generating an outline of steps and notes about the problem and how to approach it) and then produce a working result.

The only downside with o1-preview right now is that access is very limited.

I only get 50 prompts per week, which I could easily use up in an hour.

I think I’d already used 8 or 9 before realizing how limited it was.

I’ll be using o1-preview more selectively while I wait for OpenAI to surprise-announce that it’s out of preview, so I can get back to properly spamming AI with questions.

Action Over Outcome

I love Dr. K so I’m derailing the AI talk for a minute.

I listened to another episode of Mel Robbins’ podcast with Dr. K this week, and I highly recommend it.

They have a great dynamic which makes it more entertaining as well as useful.

In this talk, Dr. K tackles finding motivation and how hard that can be.

His point is that you don’t actually want to rely on motivation, because it’s too emotional and unsustainable.

Instead, it’s simply about separating action from expectation, which is what let him apply to med school more than 100 times and keep going.

He didn’t get discouraged and quit after 5 or 10 or 50 rejections because the goal was to submit the application, not get in.

I’m sure he did feel some frustration, but he kept going and kept focusing on what he could control, which was the action, and eventually he was accepted into a program.

Another version of this I’ve heard is “build systems, not motivation.”

The concept applies nearly everywhere, and it aligns with another idea Dr. K often covers: a person can really only control themselves.

Ultimately, if the right action is the goal, then the outcome will develop as a result.

So take action and listen to this talk:

AI Deep Dive Podcast

And to wrap things up with AI, things are getting a little scary.

Wes Roth showcased Google’s NotebookLM “Audio Overview” feature that creates AI summaries about whatever documents you upload, and it sounds like a real podcast.

It’s wild.

Google only provides the audio, but Wes took it a step further and used HeyGen to bring the voices to life.

The line is blurring on what’s real and what will be AI.

So naturally I threw this newsletter into NotebookLM to see what it does and it’s truly fascinating.

It gets very meta: the hosts pretend to listen to an audio clip of themselves.

Very mind-bendy.

Things are only going to get more interesting, that’s for sure.

Enjoy:

And that’s it for this week! AI captioning, clipping and podcasting. Fully automated media empires are not far away…

Those are the links that stuck with me throughout the week and a glimpse into what I personally worked on.

If you want to start a newsletter like this on beehiiv and support me in the process, here’s my referral link: https://www.beehiiv.com/?via=jay-peters. Otherwise, let me know what you think at @jaypetersdotdev or by email. I’d love to hear your feedback. Thanks for reading!